Prompt Genius

When prototyping applications I often need stock images. Stable Diffusion lets me generate them for free, with no royalties. I can generate anything from logos and graphic designs to photos and building interiors, so I no longer have to rely on sites like Unsplash, iStock or Shutterstock for placeholder images in application designs.

This was also a good opportunity to showcase my skillset.

The Original Sketch

Functional Requirements

- ImageGen - Image generation

- MotionStudio - Video generation

- Chatter - LLM

- Composer - Music Generator

- Vocalizer - Voice generator

Non-Functional Requirements

UI Design

The Wireframes

This was the original wireframe for this project. It helped me get an idea of the type of content that would be in the app. At first it seemed a little too busy, but I realized I wanted this app to do a lot of things, so the busyness didn't bother me much. The layout here isn't attractive, and I felt the generated images should get most of the focus (in this layout the only image you see is in the bottom right corner). I used Excalidraw to design the wireframe.

Mockup

In this case I didn't have to design a UI from scratch, since I copied one I liked. I used TailwindCSS as my CSS framework, with Heroicons and Bootstrap Icons for the icons. I use Affinity Designer and Affinity Photo for any mockups I need.

Moodboard

I typically begin by doing some research to find other people who designed or are building something similar. Typically I do a mashup of all of the ideas I like along with my own ideas. In this case, there was one particular design I liked so much that I just copied it almost verbatim. I get my inspiration from Dribbble or Behance.

The Prototype

I usually prototype with vanilla JS, HTML and CSS. I have a stylesheet that adds a 1px black border and a little padding to every element. This helps me see the layout as I'm building.

Schema

I decided this app wouldn't need a relational database since I'm simply sending data to the Draw Things app via a request to the Flask server which generates an image and saves it to a file.

The Stack

I initially thought to use Flask and Jinja with a little VueJS sprinkled on top, but the templating languages for Vue and Jinja conflicted (they both use double mustaches). I had been hearing about HTMX here and there and watched a video on it one day. I liked it and decided to try it out in this project. While reading the HTMX documentation I saw that they recommended using AlpineJS. After learning how Alpine works (which is much like VueJS) I decided to go with AlpineJS to make the app a bit snappier.

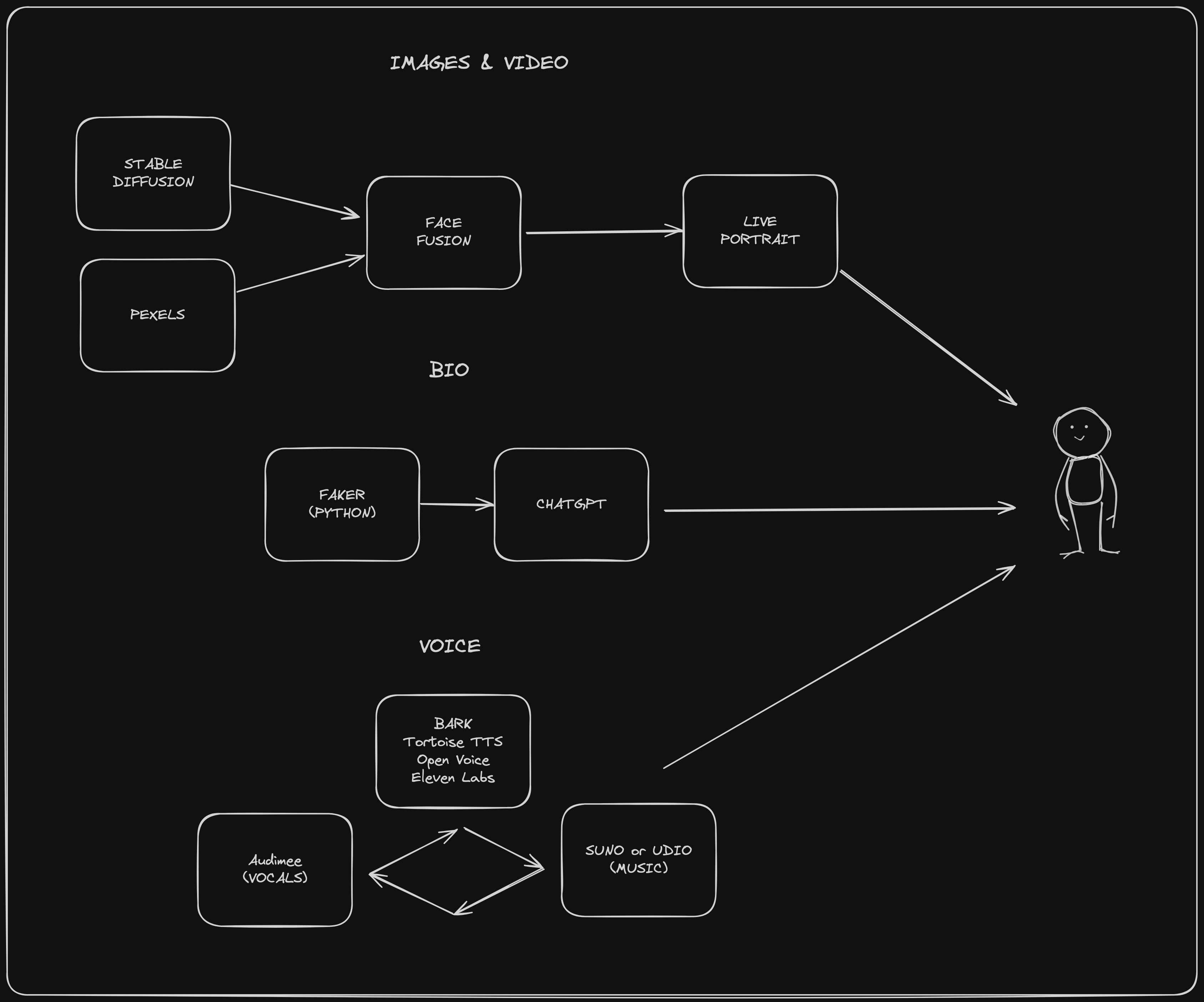

I also used bash scripts to run other Python applications in their own virtual environments. The scripts let me pass information from the Flask application to tools like Suno Bark, Tortoise TTS, FaceFusion, etc.
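
As a rough illustration of that bridge, here is a minimal sketch that builds the argument list for one of those scripts and runs it with `subprocess.run`. The `./scripts/<model>.sh` layout matches the path used in the Vocalizer route further down; `ALLOWED_MODELS`, `SCRIPTS_DIR`, `build_command` and `run_model` are hypothetical names made up for this example, not part of the app. Passing the text as its own list item, instead of pasting it into a shell string, means quotes in a prompt can't break the command.

```python
import subprocess
from pathlib import Path

# Hypothetical allowlist: only these wrapper scripts may be launched.
ALLOWED_MODELS = {"bark", "tortoise"}
SCRIPTS_DIR = Path("./scripts")  # assumed location of the wrapper scripts


def build_command(model: str, text: str) -> list[str]:
    """Return the argv list for running a model's wrapper script."""
    if model not in ALLOWED_MODELS:
        raise ValueError(f"unknown model: {model!r}")
    # Each argument is its own list item, so no shell quoting is needed.
    return ["bash", str(SCRIPTS_DIR / f"{model}.sh"), text]


def run_model(model: str, text: str) -> int:
    """Run the wrapper script and return its exit code."""
    completed = subprocess.run(build_command(model, text), check=False)
    return completed.returncode
```

A call such as `run_model("bark", "Hello, it's me")` would then hand the sentence to `bark.sh` as a single argument, apostrophe included.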

The Business Logic

A lot of tutorials (if not all) don't really get into business logic. But business logic is really the guts of any app. I usually plan this up-front but I jumped into coding to get my ideas realized and out of my head. An overview of what this app contains is below:

The idea is to have 7 sections:

Image-Gen - A wildcard Stable Diffusion prompt generator that sends requests to the Draw Things app via its HTTP server.

Bio-Gen - Generates a profile for a person using the Python Faker library.

Motion Studio - Uses deepfake technology to turn a face into a video of a person. This is useful for commercials and courses where instructors can be switched out.

Vocalizer - Uses AI voice generation tools like Suno Bark, Tortoise TTS and ElevenLabs to generate speech from text. Useful for voiceovers and tutorials.

Composer - Uses AI music generation tools such as MusicGen by Facebook and Suno Chirp to generate copyright-free music. I used this in my Spotify clone.

Chatter - A customizable AI chatbot that uses OpenAI's ChatGPT and the OpenAI library for Python (you will need an API key and a monthly subscription). Suno Bark and Tortoise TTS could be used to make it talk, but the processing time is too long for it to be beneficial. I could use the operating system's speech system, however.

Modeler - This area could generate 3D models for video games, but I haven't researched how that all works.
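
The "wildcard" idea behind Image-Gen can be sketched in a few lines. This is only an illustration under assumptions: the `__name__` token syntax, the `WILDCARDS` lists and the `expand_wildcards` helper are hypothetical stand-ins, not the generator the app actually uses.

```python
import random
import re

# Hypothetical wildcard lists; a real app might load these from text files.
WILDCARDS = {
    "color": ["red", "blue", "green"],
    "subject": ["logo", "office interior", "portrait"],
}

# Matches tokens written as __name__ (an assumed convention).
TOKEN = re.compile(r"__(\w+?)__")


def expand_wildcards(template: str, rng: random.Random) -> str:
    """Replace every __name__ token with a random entry from WILDCARDS."""
    def pick(match: re.Match) -> str:
        options = WILDCARDS.get(match.group(1))
        # Leave unknown tokens untouched rather than failing.
        return rng.choice(options) if options else match.group(0)
    return TOKEN.sub(pick, template)


if __name__ == "__main__":
    rng = random.Random()
    print(expand_wildcards("a __color__ __subject__, studio lighting", rng))
```

Each run fills the template with a different combination, which is what makes a single prompt template useful for producing a varied batch of placeholder images.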

The Process

I was scribbling in Freeform on iOS to see what it'd be good for, and while scribbling I had an idea. What if I made an app that uses Stable Diffusion to generate graphics for me to make my prototyping workflow faster?

This quickly evolved into, "What if I created an app that combined the coolest new artificial intelligence technologies to make my prototyping workflow faster?" That's when I started to sketch the idea for Prompt Genius.

I originally created Prompt Genius as a desktop application built with the CustomTkinter Python GUI library, integrating a custom Stable Diffusion wildcard prompt generator.

To get more flexibility with the user interface, I decided to rebuild it using a brand new stack that I will call the SHAFT stack: SQLite, HTMX, AlpineJS, Flask, and TailwindCSS.

Image-Gen

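import base64
import io
import random

import requests
from flask import redirect, request, url_for
from PIL import Image


@app.route('/generate', methods=['POST'])
def generate():
    form = request.form
    url = "http://127.0.0.1:7860"  # Draw Things HTTP server

    payload = {
        "prompt": form['promptbox'],
        "steps": int(form['steps']),
        "seed": random.randrange(10000000, 100000000),
        "negative_prompt": form['negative'],
        "model": form['model'],
        "batch_size": int(form['batchSize']),
    }

    response = requests.post(url=f'{url}/sdapi/v1/txt2img', json=payload)
    r = response.json()

    for i in r['images']:
        # Each entry is a base64-encoded image; drop any data-URI prefix before decoding.
        image = Image.open(io.BytesIO(base64.b64decode(i.split(",", 1)[-1])))

        png_payload = {"image": "data:image/png;base64," + i}
        # The png-info response is fetched but not used yet.
        response2 = requests.post(url=f'{url}/sdapi/v1/png-info', json=png_payload)

        # Every image is written to the same path, so only the last one of a batch is kept.
        image.save('./static/images/output.png')

    return redirect(url_for('home'))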
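
The loop above writes every image in a batch to the same `output.png`. A minimal sketch of one way to keep them all, assuming numbered file names are acceptable, is shown here; `save_images` and the `output_<n>.png` naming are hypothetical and not something the app does today. It uses only the standard library and writes the decoded bytes directly instead of going through Pillow.

```python
import base64
from pathlib import Path


def save_images(encoded_images: list[str], out_dir: Path, stem: str = "output") -> list[Path]:
    """Decode base64 strings and write each one to its own numbered file."""
    out_dir.mkdir(parents=True, exist_ok=True)
    saved = []
    for index, encoded in enumerate(encoded_images):
        # Drop an optional "data:image/png;base64," prefix if one is present.
        payload = encoded.split(",", 1)[-1]
        path = out_dir / f"{stem}_{index}.png"
        path.write_bytes(base64.b64decode(payload))
        saved.append(path)
    return saved
```

Inside the route this could be called as `save_images(r['images'], Path('./static/images'))`, and the returned paths passed on to the template.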

Bio-Gen

import os
import random

from faker import Faker
from flask import render_template


@app.route('/bio-gen')
def bioGen():
    # The route only accepts GET by default, so no method check is needed.
    fake = Faker()
    person = fake.profile()
    person['eye_color'] = random.choice(['red', 'blue', 'brown'])
    person['hair_style'] = random.choice(['straight', 'curly', 'kinky', 'braided', 'twisted', 'afro'])
    person['skin_type'] = random.choice(['dark', 'light', 'fair', 'smooth', 'freckled'])
    print(person)

    # Collect the PNG/JPG files available for the profile picture.
    people_dir = os.path.join(app.static_folder, "images/fakePeople")
    images = [f for f in os.listdir(people_dir) if f.lower().endswith(('.png', '.jpg'))]

    return render_template('profiler.html', person=person, images=images)

Motion Studio

Vocalizer

Eventually the model that I want to run will be passed as a URL parameter set by the user. I don't think the async code does me any good, so I'm going to remove it. Perhaps it should be a Celery job?
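
Short of setting up Celery, one standard-library option is to hand the slow command to a small thread pool and return from the request straight away. This is only a sketch under that assumption: `EXECUTOR`, `run_command`, `submit_job` and the single-worker limit are hypothetical choices, and the `sys.executable -c ...` command is a stand-in for the real Bark or Tortoise script.

```python
import subprocess
import sys
from concurrent.futures import Future, ThreadPoolExecutor

# One worker so two heavy generation jobs never run at the same time (assumed limit).
EXECUTOR = ThreadPoolExecutor(max_workers=1)


def run_command(argv: list[str]) -> int:
    """Blocking call: run the command and return its exit code."""
    return subprocess.run(argv, check=False).returncode


def submit_job(argv: list[str]) -> Future:
    """Queue the command on the pool and return immediately."""
    return EXECUTOR.submit(run_command, argv)


if __name__ == "__main__":
    # Stand-in for `bash ./scripts/bark.sh "<text>"`.
    job = submit_job([sys.executable, "-c", "print('speech generated')"])
    print("request can return now; job done yet?", job.done())
    print("exit code:", job.result())  # waits for the job to finish
```

Unlike a Celery queue, jobs submitted this way live inside the Flask process and are lost if it restarts, which may be fine for a local-only tool.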

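import subprocess

from flask import redirect, request, url_for


@app.route('/vocalize', methods=['POST'])
def speak():
    # Hard-coded for now; only known script names are allowed.
    mod = 'bark'
    model_to_run = mod if mod in ('bark', 'tortoise') else 'bark'

    text_to_speak = request.form['prompt']

    # async/await dropped: os.system() blocks and returns an int, so it can't be awaited.
    # Passing the text as its own argument avoids shell-quoting problems.
    subprocess.run(['bash', f'./scripts/{model_to_run}.sh', text_to_speak])

    return redirect(url_for('home'))

Composer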

Composer generates song lyrics from a text file of lyrics using a simple Markov chain class written in Python. These lyrics are used as prompts for Suno Chirp. You can also enter a song genre.
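
To make the Markov chain idea concrete, here is a small sketch of the lookup table such a model builds from a toy sentence. The `build_transitions` helper and the sample text are made up for illustration; the structure (a tuple of words mapped to the list of words seen after it) mirrors what an order-2 model stores.

```python
from collections import defaultdict


def build_transitions(text: str, order: int = 2) -> dict[tuple[str, ...], list[str]]:
    """Map each run of `order` words to the words that followed it."""
    words = text.split()
    table = defaultdict(list)
    for i in range(len(words) - order):
        state = tuple(words[i:i + order])
        table[state].append(words[i + order])
    return dict(table)


if __name__ == "__main__":
    toy = "the lord is my shepherd the lord is my light"
    for state, followers in build_transitions(toy).items():
        print(state, "->", followers)
```

In this toy text the state `('is', 'my')` is followed by both "shepherd" and "light", so generation can branch there. A follower that appears twice in a list, like "is" after `('the', 'lord')`, is simply twice as likely to be picked.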

I used some prompt engineering in ChatGPT to get the class below, and it works really well. It took me 3 attempts to get it working. I'm impressed by how simple it is compared to what I could find online. ChatGPT originally used NumPy, but I didn't want any third-party libraries to be used. This was the result.

import random


class MarkovChainLyricsGenerator:
    def __init__(self, order=2):
        self.order = order  # number of words that make up one state
        self.model = {}     # maps a state (tuple of words) to the words seen after it

    def train(self, lyrics):
        words = lyrics.split()
        for i in range(len(words) - self.order):
            current_state = tuple(words[i:i + self.order])
            next_word = words[i + self.order]
            if current_state not in self.model:
                self.model[current_state] = []
            self.model[current_state].append(next_word)

    def generate_lyrics(self, length=100):
        # Start from a random state, then keep sampling a follower of the last `order` words.
        current_state = random.choice(list(self.model.keys()))
        generated_lyrics = list(current_state)
        for _ in range(length - self.order):
            if current_state in self.model:
                next_word = random.choice(self.model[current_state])
                generated_lyrics.append(next_word)
                current_state = tuple(generated_lyrics[-self.order:])
            else:
                # Dead end: this state was never followed by anything in training.
                break
        return ' '.join(generated_lyrics)


# Example usage:
if __name__ == "__main__":
    with open('./static/psalms.txt', 'r') as infile:
        lyrics = infile.read()

    generator = MarkovChainLyricsGenerator(order=2)
    generator.train(lyrics)
    generated_lyrics = generator.generate_lyrics(length=200)
    print(generated_lyrics)

Chatter

Chatter allows users to send text to either Tortoise TTS or Suno Bark to generate audio. An API request is made to a Flask endpoint that uses the os module to run a bash script from the command line. The bash script changes directory into the folder on my computer where Tortoise or Bark lives, activates the virtual environment it runs in, and runs the commands to start the service.

Modeler or Game Studio

The original idea for Modeler was to let you create 3D models, but I think it could end up being a studio for generating video game assets, from textures, backgrounds and concept art to sound FX, music and shaders (using ChatGPT).

Neat Trick

Since I'm not deploying this anywhere, I wanted a quick way to launch the app on my Mac. I used Shortcuts to run a bash script that launches Draw Things and Prompt Diffusion! I added it to my Dock and to my menu bar. I had to use the webbrowser library in the app to open it in the browser.

I will be using Shortcuts more. I didn't know it could do so much!

if __name__ == '__main__':
    # Open the app in the default browser, then start the Flask dev server.
    webbrowser.open_new('http://127.0.0.1:4000/')
    app.run(debug=True, port=4000)

A much simpler path?

A much simpler path could be to use JS to send data directly to Draw Things. All other intensive processing could still be done with Flask.

<!DOCTYPE html>
<html lang='en'>
<head>
    <meta charset='UTF-8'>
    <meta http-equiv='X-UA-Compatible' content='IE=edge'>
    <meta name='viewport' content='width=device-width, initial-scale=1.0'>
    <title>Draw Things</title>
    <script src='https://cdn.tailwindcss.com'></script>
</head>
<body>
    <!-- The Form -->
    <form id="dtForm" class="w-1/2 m-8 p-4 mx-auto bg-gray-200">
        <input name="prompt" id="prompt" placeholder="prompt here">
        <input name="negative_prompt" id="negative_prompt" placeholder="negative prompt here">
        <input type="submit">
    </form>
    <!-- /The Form -->

    <!-- Image Container -->
    <div id="imageContainer" width="70%">
        <img id="resultImage" src="" alt="" width="70%">
    </div>
    <!-- /Image Container -->

    <!-- API Call -->
    <script>
        document.getElementById('dtForm').addEventListener('submit', function(event) {
            event.preventDefault();

            // Form Fields
            let prompt = document.getElementById('prompt').value;
            let negative_prompt = document.getElementById('negative_prompt').value;
            // Add more fields to the form and load here if you'd like

            // Payload/data to send
            let payload = {
                "prompt": prompt,
                "negative_prompt": negative_prompt,
                "seed": Math.floor(Math.random() * 100000000),
                "steps": 20,
                "guidance_scale": 7,
                "batch_count": 1,
                "width": 512,
                "height": 512
            };

            // Make a POST request to DT.
            // mode: 'no-cors' was removed: it makes the response opaque, so
            // response.ok is always false and the JSON body can't be read.
            // Without it, the server has to allow cross-origin requests.
            fetch(`http://127.0.0.1:7860/sdapi/v1/txt2img`, {
                method: 'POST',
                headers: {
                    'Content-Type': 'application/json'
                },
                body: JSON.stringify(payload)
            })
            .then(response => {
                if (!response.ok) {
                    throw new Error('Network response was not ok');
                }
                return response.json();
            })
            .then(data => {
                // Replace whatever is in the container with the new images
                let imageContainer = document.getElementById('imageContainer');
                imageContainer.innerHTML = '';
                data.images.forEach(imageData => {
                    let imgElement = document.createElement('img');
                    imgElement.src = `data:image/png;base64,${imageData}`;
                    imageContainer.appendChild(imgElement);
                });
            })
            .catch(error => console.error(error));
        });
    </script>
</body>
</html>

Updated Process Map Jul, 2024