Artificial Intelligence

Artificial intelligence (AI) is a branch of computer science focused on creating machines that can mimic human cognitive functions such as learning, problem-solving, and decision-making. These machines use techniques including machine learning and deep learning to analyze data, identify patterns, and make predictions, and they are already used in fields like healthcare, finance, and transportation. Although AI does not currently replicate human consciousness, it is evolving rapidly and holds significant potential for shaping the future.

AI Image Generation

Product Design

Better Design

Food Photography

Better Design

Textbook Graphics

King of the Jungle

Creative Thinking

A cup of coffee connected to a man's head while he drinks the coffee, full of cables and wires, futuristic

AI Music

AI-generated music is music composed with the help of artificial intelligence algorithms. Unlike traditional composition, which relies on a human writing every note, these algorithms analyze large amounts of existing music to identify patterns and structures. Based on this analysis and on user input such as a desired genre or mood, AI can generate new compositions, including melodies, harmonies, rhythms, and even instrumentation. This technology is used to create soundscapes for film scores, video game soundtracks, and personalized background music.
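The "learn patterns, then generate" idea can be illustrated with a deliberately simple stand-in: a first-order Markov chain over note names. This is only a sketch and is not how production music models work; the example melodies and the function names `learn_transitions` and `generate_melody` are made up for illustration.

```python
import random
from collections import defaultdict


def learn_transitions(melodies):
    """Record, for every note, which notes followed it in the examples."""
    transitions = defaultdict(list)
    for melody in melodies:
        for current, following in zip(melody, melody[1:]):
            transitions[current].append(following)
    return transitions


def generate_melody(transitions, start, length, rng):
    """Walk the learned transitions to produce a new note sequence."""
    melody = [start]
    while len(melody) < length:
        options = transitions.get(melody[-1])
        if not options:  # dead end: this note was never followed by anything
            break
        melody.append(rng.choice(options))
    return melody


# Hypothetical training data: two short melodies written as note names.
examples = [
    ["C", "D", "E", "C", "E", "G"],
    ["E", "G", "C", "D", "E", "D"],
]
transitions = learn_transitions(examples)
new_melody = generate_melody(transitions, start="C", length=8, rng=random.Random(0))
print(new_melody)
```

Every step in the generated melody is a note pair that occurred somewhere in the examples, yet the sequence as a whole need not match either of them.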

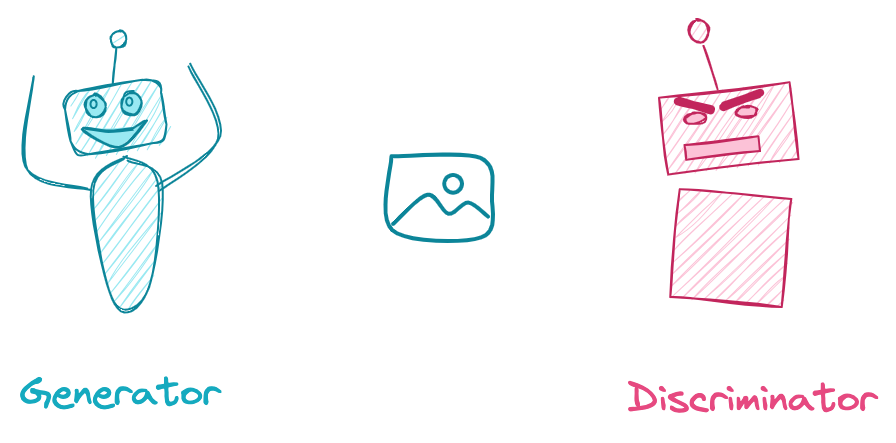

GANs

Generative Adversarial Networks (GANs) are a type of artificial intelligence model built from two competing components: a discriminator and a generator. Their roles are described below.

The Discriminator: The Art Critic

- Goal: The discriminator's primary task is to become an expert at distinguishing between real data (e.g., real photos of faces) and fake data (e.g., images of faces generated by an AI).

- Training: It's fed a mix of both real and fake samples. The discriminator aims to get better at correctly identifying whether a given sample is real or artificial.

- Feedback: The discriminator's judgments are passed back to the generator as a training signal, indicating how realistic or unrealistic its fake data currently looks.
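One common way to score the discriminator is binary cross-entropy: real samples should receive a probability near 1 and fake samples a probability near 0. The sketch below assumes the discriminator outputs probabilities in (0, 1); the function name `discriminator_loss` and the sample scores are illustrative, not taken from a specific library.

```python
import math


def discriminator_loss(real_scores, fake_scores, eps=1e-12):
    """Binary cross-entropy: low when reals score near 1 and fakes near 0."""
    real_term = -sum(math.log(s + eps) for s in real_scores) / len(real_scores)
    fake_term = -sum(math.log(1.0 - s + eps) for s in fake_scores) / len(fake_scores)
    return real_term + fake_term


# A confident, correct discriminator versus one that is just guessing.
confident = discriminator_loss([0.99, 0.98], [0.01, 0.02])
unsure = discriminator_loss([0.5, 0.5], [0.5, 0.5])
print(confident, unsure)
```

A discriminator that outputs 0.5 for everything has a loss of about 2·ln 2, which is the value it drifts toward when the generator's samples become hard to tell apart from real data.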

The Generator: The Master Forger

- Goal: The generator's job is the opposite: to create fake data that's so convincing it can fool the discriminator into believing it's real.

- Training: It takes random noise as input. At first its outputs look like noise too, but over time it uses the feedback from the discriminator to refine its creations.

- Success criterion: The generator succeeds when its outputs are indistinguishable from real data, so that the discriminator can do no better than guess.

The Adversarial Dance

The discriminator and generator are locked in a continuous competition:

- The Discriminator Judges: The discriminator is shown examples of real data and fake data created by the generator.

- Generator Improves: The generator uses the discriminator's feedback to refine its technique, attempting to create even more convincing fake data in the next round.

- The Critic Becomes Stronger: In turn, the discriminator gets better at telling the real from the fake.

This "arms race" between the two components drives both of them to become increasingly skilled. Over many iterations, the generator eventually becomes capable of producing fake data that's nearly indistinguishable from reality.
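The alternating updates can be sketched with a toy one-dimensional GAN written in plain Python. Everything here is an assumption chosen for brevity: the "real" data is drawn from a normal distribution centred at 4, the generator is the linear map `a*z + b`, the discriminator is a logistic unit `sigmoid(w*x + c)`, the gradients are derived by hand, and the generator uses the common non-saturating loss `-log D(G(z))`. Toy GANs like this often oscillate rather than settle, so no particular final values should be expected.

```python
import math
import random


def sigmoid(s):
    s = max(-60.0, min(60.0, s))  # clamp to keep math.exp in a safe range
    return 1.0 / (1.0 + math.exp(-s))


def train_toy_gan(steps=2000, lr=0.05, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator parameters: G(z) = a*z + b
    w, c = 0.0, 0.0  # discriminator parameters: D(x) = sigmoid(w*x + c)
    for _ in range(steps):
        x_real = rng.gauss(4.0, 0.5)  # hypothetical real data
        z = rng.gauss(0.0, 1.0)       # random noise fed to the generator
        x_fake = a * z + b

        # Discriminator step: push D(x_real) toward 1 and D(x_fake) toward 0.
        d_real = sigmoid(w * x_real + c)
        d_fake = sigmoid(w * x_fake + c)
        w -= lr * ((d_real - 1.0) * x_real + d_fake * x_fake)
        c -= lr * ((d_real - 1.0) + d_fake)

        # Generator step: push D(x_fake) toward 1 using the updated critic.
        d_fake = sigmoid(w * x_fake + c)
        grad_x = (d_fake - 1.0) * w  # gradient of -log D(x) w.r.t. x_fake
        a -= lr * grad_x * z
        b -= lr * grad_x
    return a, b, w, c


a, b, w, c = train_toy_gan()
print(f"generator: a={a:.3f}, b={b:.3f}; discriminator: w={w:.3f}, c={c:.3f}")
```

Each loop iteration is one round of the competition: the discriminator is updated on one real and one fake sample, then the generator is updated using the gradient that flows back through the discriminator.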

Analogy: Think of it like an art forger and an art expert. The forger tries to create convincing counterfeit paintings, while the expert tries to distinguish between the real masterpieces and fakes. The forger keeps getting better with each attempt, while the expert develops an increasingly discerning eye.

Key Points:

- GANs learn without explicit labels. The beauty of GANs is that they don't rely on labeled data, just examples of what you want to generate.

- Applications: GANs are used for creating realistic images, videos, audio, and other types of data for creative applications, simulations, and more.


Types of GANs

Generative Adversarial Networks (GANs) have spawned a diverse range of architectures, each tailored to specific applications and addressing certain challenges of the original framework. Here's a breakdown of some commonly encountered GAN variants:

1. Vanilla GAN (VGAN):

- The original: This is the first GAN architecture, introduced in 2014. It consists of a simple generator and a discriminator with basic neural network structures.

- Challenges: VGANs can be susceptible to training instability, leading to issues like mode collapse, where the generator gets stuck producing a limited set of outputs.
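Mode collapse can be checked for in toy settings where the modes of the real data are known. The helper below is a hypothetical diagnostic, not a standard metric: it assumes one-dimensional samples and a hand-picked list of mode centres, and simply counts how many centres have at least one generated sample nearby.

```python
def modes_covered(samples, mode_centers, tolerance):
    """Return the indices of the modes that at least one sample lands near."""
    covered = set()
    for x in samples:
        for i, center in enumerate(mode_centers):
            if abs(x - center) <= tolerance:
                covered.add(i)
    return covered


mode_centers = [-4.0, 0.0, 4.0]           # hypothetical modes of the real data
healthy = [-4.1, 0.2, 3.9, -3.8, 0.1]     # samples spread over all modes
collapsed = [3.9, 4.1, 4.0, 3.95, 4.05]   # samples stuck on a single mode

print(len(modes_covered(healthy, mode_centers, 0.5)))    # 3
print(len(modes_covered(collapsed, mode_centers, 0.5)))  # 1
```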

2. Deep Convolutional GAN (DCGAN):

- Addressing VGAN's issues: DCGANs use deep convolutional neural networks in both the generator and discriminator, specifically designed to handle image data.

- Benefits: DCGANs significantly improve the quality and stability of generated images compared to VGANs. They are widely used for applications like image generation and editing.
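In a DCGAN-style generator, the image is typically built up by a stack of transposed convolutions that enlarge a small feature map step by step. The sketch below only does the size arithmetic for such a stack. The layer settings (kernel 4, stride 2, padding 1 after an initial 4x4 projection) are an assumed, commonly used configuration and are not stated in the text above.

```python
def transposed_conv_size(size, kernel, stride, padding):
    """Output width of a transposed convolution (no dilation, no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel


# Assumed (kernel, stride, padding) settings for each generator layer.
layers = [(4, 1, 0), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1)]

size = 1  # the noise vector treated as a 1x1 feature map
sizes = [size]
for kernel, stride, padding in layers:
    size = transposed_conv_size(size, kernel, stride, padding)
    sizes.append(size)

print(sizes)  # [1, 4, 8, 16, 32, 64]
```

With these settings each stride-2 layer doubles the spatial size, so five layers take a 1x1 input to a 64x64 image.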

3. Conditional GAN (CGAN):

- Adding Control: CGANs introduce an additional input to both the generator and discriminator, allowing them to condition the generated data based on this extra information.

- Applications: CGANs are useful for tasks like generating images conditioned on specific labels (e.g., generating images of cats wearing hats) or translating images from one style to another.
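One simple way to supply the extra input is to append a one-hot encoding of the label to whatever each network normally receives. This is a minimal sketch of that idea using plain lists; the function names are illustrative, and real implementations may instead use learned label embeddings.

```python
import random


def one_hot(label, num_classes):
    """Encode a class index as a vector with a single 1.0 entry."""
    return [1.0 if i == label else 0.0 for i in range(num_classes)]


def generator_input(noise, label, num_classes):
    """Generator sees the noise vector followed by the label encoding."""
    return noise + one_hot(label, num_classes)


def discriminator_input(sample, label, num_classes):
    """Discriminator sees the sample followed by the same label encoding."""
    return sample + one_hot(label, num_classes)


rng = random.Random(0)
noise = [rng.gauss(0.0, 1.0) for _ in range(4)]
g_in = generator_input(noise, label=2, num_classes=3)
print(len(g_in), g_in[-3:])  # 7 [0.0, 0.0, 1.0]
```

Because the discriminator also receives the label, it can reject a realistic sample that does not match the requested class, which is what pushes the generator to respect the condition.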

4. CycleGAN:

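- Image-to-image Translation: CycleGAN translates images from one domain to another (e.g., turning photos of horses into photos of zebras) without needing paired examples of the same scene in both domains.

- Unique Approach: It uses two generators and two discriminators working in a cyclic fashion. One generator maps domain A to domain B and the other maps B back to A, and a cycle-consistency constraint requires that an image translated to the other domain and back again resembles the original.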
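The cyclic constraint is usually expressed as a cycle-consistency loss: translate an image to the other domain and back, then measure how far the result is from the original. The sketch below uses an L1 distance and toy stand-in "generators" that just scale pixel values; all function names and data are hypothetical.

```python
def l1_distance(a, b):
    """Mean absolute difference between two equally sized pixel lists."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(x, y, g_xy, g_yx):
    """Round-trip error for X -> Y -> X plus Y -> X -> Y."""
    forward = l1_distance(x, g_yx(g_xy(x)))
    backward = l1_distance(y, g_xy(g_yx(y)))
    return forward + backward


# Toy stand-ins for the two generators.
def to_y(image):
    return [2.0 * p for p in image]


def to_x(image):
    return [0.5 * p for p in image]


def lossy_to_x(image):
    return [0.5 * p + 0.1 for p in image]


x = [0.2, 0.4, 0.8]
y = [0.4, 0.8, 1.6]
perfect = cycle_consistency_loss(x, y, to_y, to_x)
lossy = cycle_consistency_loss(x, y, to_y, lossy_to_x)
print(perfect, lossy)
```

The pair of exact inverses gives a loss of zero, while the lossy pair is penalised; in training this term is added to the usual adversarial losses.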

5. StyleGAN:

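- Generating High-Fidelity Images: StyleGAN builds on progressive growing and adds a style-based generator, in which a mapping network turns the latent vector into style codes that control each layer of the generator. This allows it to produce high-resolution, photorealistic images.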

- Applications: StyleGAN is well-suited for tasks like generating faces, editing existing images, and creating new image datasets.

6. Other Variations:

- Numerous other GAN variants exist, each addressing specific challenges or tailoring the framework for particular applications. Some examples include:

- Wasserstein GAN (WGAN): Improves training stability by replacing the original loss function with one based on the Wasserstein (earth mover's) distance between the real and generated distributions.

- Progressive GAN (ProGAN): The predecessor of StyleGAN. It grows the generator and discriminator progressively during training, starting at low resolution and adding layers for higher resolutions.

- Text-to-Image GANs: Translate text descriptions into corresponding images.
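As one concrete case from the list above, the WGAN change to the loss can be sketched in a few lines. The discriminator becomes a "critic" that outputs unbounded scores instead of probabilities, and its loss is the difference between the mean scores on fake and real samples. The weight clipping shown is the constraint used in the original WGAN formulation; the function names and example numbers are illustrative.

```python
def critic_loss(real_scores, fake_scores):
    """Lower is better for the critic: real scores high, fake scores low."""
    mean_real = sum(real_scores) / len(real_scores)
    mean_fake = sum(fake_scores) / len(fake_scores)
    return mean_fake - mean_real


def generator_loss(fake_scores):
    """The generator tries to raise the critic's score on its samples."""
    return -sum(fake_scores) / len(fake_scores)


def clip_weights(weights, limit=0.01):
    """Keep every critic weight inside [-limit, limit] after each update."""
    return [max(-limit, min(limit, w)) for w in weights]


print(critic_loss([2.0, 4.0], [-1.0, 1.0]))  # -3.0
print(generator_loss([-1.0, 1.0]))
print(clip_weights([0.5, -0.2, 0.005]))      # [0.01, -0.01, 0.005]
```

Because the scores are not squashed through a sigmoid, the critic keeps providing a usable gradient even when it separates real from fake samples easily.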

This is not an exhaustive list. Ongoing research continues to introduce new GAN architectures that push the boundaries of what is possible with generative models.